Assistants¶

Introduction¶

The Assistants API allows you to build AI assistants within your own applications. An Assistant has instructions and can leverage models, tools, and knowledge to respond to user queries. The Assistants API currently supports three types of tools: Code Interpreter, Retrieval, and Function calling.

- Assistants can call OpenAI’s models with specific instructions to tune their personality and capabilities.

- Assistants can access multiple tools in parallel. These can be both OpenAI-hosted tools — like Code interpreter and Knowledge retrieval — or tools you build / host (via Function calling).

- Assistants can access persistent Threads. Threads simplify AI application development by storing message history and truncating it when the conversation gets too long for the model’s context length. You create a Thread once, and simply append Messages to it as your users reply.

- Assistants can access Files in several formats — either as part of their creation or as part of Threads between Assistants and users. When using tools, Assistants can also create files (e.g., images, spreadsheets, etc) and cite files they reference in the Messages they create.

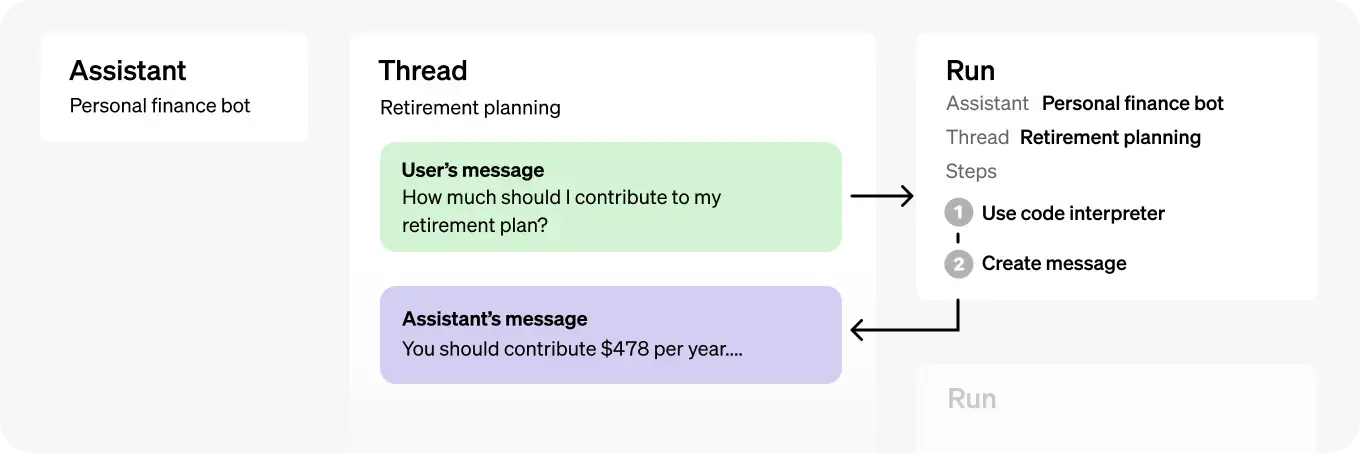

You can explore the capabilities of the Assistants API using the Assistants playground or by building a step-by-step integration outlined in this guide. At a high level, a typical integration of the Assistants API has the following flow:

- Create an Assistant in the API by defining its custom instructions and picking a model. If helpful, enable tools like Code Interpreter, Retrieval, and Function calling.

- Create a Thread when a user starts a conversation.

- Add Messages to the Thread as the user asks questions.

- Run the Assistant on the Thread to trigger responses. This automatically calls the relevant tools.

The Assistants API is in beta. Calls to the Assistants API require that you pass a beta HTTP header. This is handled automatically if you’re using OpenAI’s official Python or Node.js SDKs.

OpenAI-Beta: assistants=v1
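If you call the REST endpoints directly instead of going through an SDK, you have to attach the header to each request yourself. The following is a minimal sketch: `build_assistants_headers` is a hypothetical helper name and the API key is a placeholder. Only the `OpenAI-Beta: assistants=v1` value is taken from this guide.

```python
def build_assistants_headers(api_key: str, beta_version: str = "assistants=v1") -> dict:
    """Return HTTP headers for a raw Assistants API request.

    Hypothetical helper for illustration; it is not part of any SDK.
    """
    if not api_key:
        raise ValueError("api_key must be a non-empty string")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "OpenAI-Beta": beta_version,  # beta header required by the Assistants API
    }


headers = build_assistants_headers("sk-placeholder")  # placeholder, not a real key
print(headers["OpenAI-Beta"])
```

The resulting dictionary can be passed as the headers argument of whichever HTTP client you use.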

How Assistants Work¶

Objects¶

| OBJECT | WHAT IT REPRESENTS |

|---|---|

| Assistant | Purpose-built AI that uses OpenAI’s models and calls tools |

| Thread | A conversation session between an Assistant and a user. Threads store Messages and automatically handle truncation to fit content into a model’s context. |

| Message | A message created by an Assistant or a user. Messages can include text, images, and other files. Messages are stored as a list on the Thread. |

| Run | An invocation of an Assistant on a Thread. The Assistant uses its configuration and the Thread’s Messages to perform tasks by calling models and tools. As part of a Run, the Assistant appends Messages to the Thread. |

| Run Step | A detailed list of steps the Assistant took as part of a Run. An Assistant can call tools or create Messages during its run. Examining Run Steps allows you to introspect how the Assistant is getting to its final results. |

Step 1: Create an Assistant¶

An Assistant represents an entity that can be configured to respond to users’ Messages using several parameters like:

- Instructions: Use the instructions parameter to guide the personality of the Assistant and define its goals. Instructions are similar to system messages in the Chat Completions API.

- Model: You can specify any GPT-3.5 or GPT-4 model.

- Tools: Use the tools parameter to give the Assistant access to up to 128 tools. You can give it access to OpenAI-hosted tools like code_interpreter and retrieval, or call third-party tools via function calling.

- File_ids: Use the file_ids parameter to give tools like code_interpreter and retrieval access to files. Files are uploaded using the File upload endpoint and must have the purpose set to assistants to be used with this API.
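The parameters above can be checked before any request is made. The sketch below assumes a hypothetical helper, `build_assistant_params`, which enforces the 128-tool limit and the three tool types named in this guide. It only assembles a dictionary and does not call the API.

```python
MAX_TOOLS = 128  # limit stated in this guide
KNOWN_TOOL_TYPES = {"code_interpreter", "retrieval", "function"}  # tool types named in this guide


def build_assistant_params(name, instructions, model, tools=None, file_ids=None):
    """Assemble keyword arguments for creating an Assistant (hypothetical helper)."""
    tools = list(tools or [])
    file_ids = list(file_ids or [])
    if len(tools) > MAX_TOOLS:
        raise ValueError(f"At most {MAX_TOOLS} tools are allowed, got {len(tools)}")
    for tool in tools:
        if tool.get("type") not in KNOWN_TOOL_TYPES:
            raise ValueError(f"Unknown tool type: {tool.get('type')!r}")
    params = {"name": name, "instructions": instructions, "model": model, "tools": tools}
    if file_ids:
        # Only include file_ids when files were actually supplied.
        params["file_ids"] = file_ids
    return params


params = build_assistant_params(
    name="Math Tutor",
    instructions="You are a personal math tutor. Write and run code to answer math questions.",
    model="gpt-4o-mini",
    tools=[{"type": "code_interpreter"}],
)
print(sorted(params))
```

A dictionary built this way could then be unpacked into the create call, for example `client.beta.assistants.create(**params)`.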

In the following example, we're creating an Assistant that is a personal math tutor, with the Code Interpreter tool enabled.

from mlteam_utils import print_object

# In this example, we're creating an Assistant that is a personal math tutor, with the Code Interpreter tool enabled.

from openai import OpenAI

client = OpenAI()

assistant = client.beta.assistants.create(

    name="Math Tutor",

    instructions="You are a personal math tutor. Write and run code to answer math questions.",

    tools=[{"type": "code_interpreter"}],

    model="gpt-4o-mini"

)

Step 2: Create a Thread¶

A Thread represents a conversation. We recommend creating one Thread per user as soon as the user initiates the conversation. Pass any user-specific context and files in this thread by creating Messages.

Threads don’t have a size limit. You can add as many Messages as you want to a Thread. The Assistant will ensure that requests to the model fit within the maximum context window, using relevant optimization techniques such as truncation, which we have tested extensively with ChatGPT. When you use the Assistants API, you delegate control over how many input tokens are passed to the model for any given Run. This means you have less control over the cost of running your Assistant in some cases, but you do not have to deal with the complexity of managing the context window yourself.
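To make the idea of truncation concrete, here is a rough sketch of one possible strategy: keep the most recent messages that fit a token budget. This illustrates the general technique only and is not the method the Assistants API uses, which this guide does not specify. The word-count token estimate and both function names are assumptions.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


def truncate_to_budget(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the newest messages whose combined estimate fits within max_tokens."""
    kept = []
    used = 0
    for text in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(text)
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(text)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["hello there", "how can I help you today", "solve 3x + 11 = 14"]
print(truncate_to_budget(history, max_tokens=12))
```

With a budget of 12 estimated tokens, the oldest message is dropped and the two most recent ones are kept.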

Organizations that have enabled the Threads page can view Threads created through the Assistants API and Assistants playground. Threads page permissions can be managed in Organization settings.

thread = client.beta.threads.create()

Step 3: Add a Message to a Thread¶

A Message contains text, and optionally any files that you allow the user to upload. Messages need to be added to a specific Thread. Adding images via message objects, as in Chat Completions using GPT-4 with Vision, is not supported today, but we plan to add support for them in the coming months. You can still upload images and have them processed via retrieval.

message = client.beta.threads.messages.create(

    thread_id=thread.id,

    role="user",

    content="I need to solve the equation `3x + 11 = 14`. Can you help me?"

)

# Now if you list the Messages in a Thread, you will see that this message has been appended.

thread_messages = client.beta.threads.messages.list(thread.id)

print_object(thread_messages.data)

[

{

"id": "msg_J1xnOOQhiTWsgB8dzmA59iOW",

"assistant_id": null,

"attachments": [],

"completed_at": null,

"content": [

{

"text": {

"annotations": [],

"value": "I need to solve the equation `3x + 11 = 14`. Can you help me?"

},

"type": "text"

}

],

"created_at": 1714312439,

"incomplete_at": null,

"incomplete_details": null,

"metadata": {},

"object": "thread.message",

"role": "user",

"run_id": null,

"status": null,

"thread_id": "thread_NwIIUxyNx3h6m3JejynlCDtD"

}

]
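The listing above shows that a Message's content is a list of typed parts, each with a "type" field and, for text, a nested "text" object holding the value. A small sketch of pulling the text out of that structure follows. It works on plain dictionaries shaped like the listing, and `extract_text` is a hypothetical helper name.

```python
def extract_text(message: dict) -> list:
    """Return the text values of a Message dict, skipping non-text content parts."""
    return [
        part["text"]["value"]
        for part in message.get("content", [])
        if part.get("type") == "text"
    ]


# Trimmed copy of the Message shown above (only the fields used here).
sample_message = {
    "id": "msg_J1xnOOQhiTWsgB8dzmA59iOW",
    "role": "user",
    "content": [
        {
            "type": "text",
            "text": {
                "annotations": [],
                "value": "I need to solve the equation `3x + 11 = 14`. Can you help me?",
            },
        }
    ],
}
print(extract_text(sample_message))
```

Filtering on the part type keeps the helper from failing if a Message also carries non-text parts.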

Step 4: Run the Assistant¶

For the Assistant to respond to the user message, you need to create a Run. This makes the Assistant read the Thread and decide whether to call tools (if they are enabled) or simply use the model to best answer the query. As the run progresses, the assistant appends Messages to the thread with the role="assistant". The Assistant will also automatically decide what previous Messages to include in the context window for the model. This has both an impact on pricing as well as model performance. The current approach has been optimized based on what we learned building ChatGPT and will likely evolve over time.

You can optionally pass new instructions to the Assistant while creating the Run, but note that these instructions override the default instructions of the Assistant.

By default, a Run will use the model and tools configuration specified in the Assistant object, but you can override most of these when creating the Run for added flexibility.

Note: file_ids associated with the Assistant cannot be overridden during Run creation. You must use the modify Assistant endpoint to do this.
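The override rules above can be captured in a small guard. This sketch assumes a hypothetical helper, `build_run_params`, that accepts only the three per-Run overrides this guide mentions (instructions, model, tools) and rejects file_ids. It assembles a dictionary and does not call the API.

```python
ALLOWED_OVERRIDES = {"instructions", "model", "tools"}  # overrides mentioned in this guide


def build_run_params(thread_id, assistant_id, **overrides):
    """Assemble keyword arguments for creating a Run (hypothetical helper)."""
    if "file_ids" in overrides:
        raise ValueError("file_ids cannot be overridden on a Run; modify the Assistant instead")
    unknown = set(overrides) - ALLOWED_OVERRIDES
    if unknown:
        raise ValueError(f"Unsupported override(s): {sorted(unknown)}")
    params = {"thread_id": thread_id, "assistant_id": assistant_id}
    # Skip overrides left as None so the Assistant's own configuration applies.
    params.update({key: value for key, value in overrides.items() if value is not None})
    return params


run_params = build_run_params(
    "thread_123",  # placeholder IDs for illustration
    "asst_456",
    instructions="Please address the user as Jane Doe. The user has a premium account.",
    model=None,
)
print(run_params)
```

Leaving an override out, or passing None, keeps the value configured on the Assistant.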

# Trigger the run

run = client.beta.threads.runs.create(

    thread_id=thread.id,

    assistant_id=assistant.id,

    instructions="Please address the user as Jane Doe. The user has a premium account."

)

Step 5: Check the Run Status¶

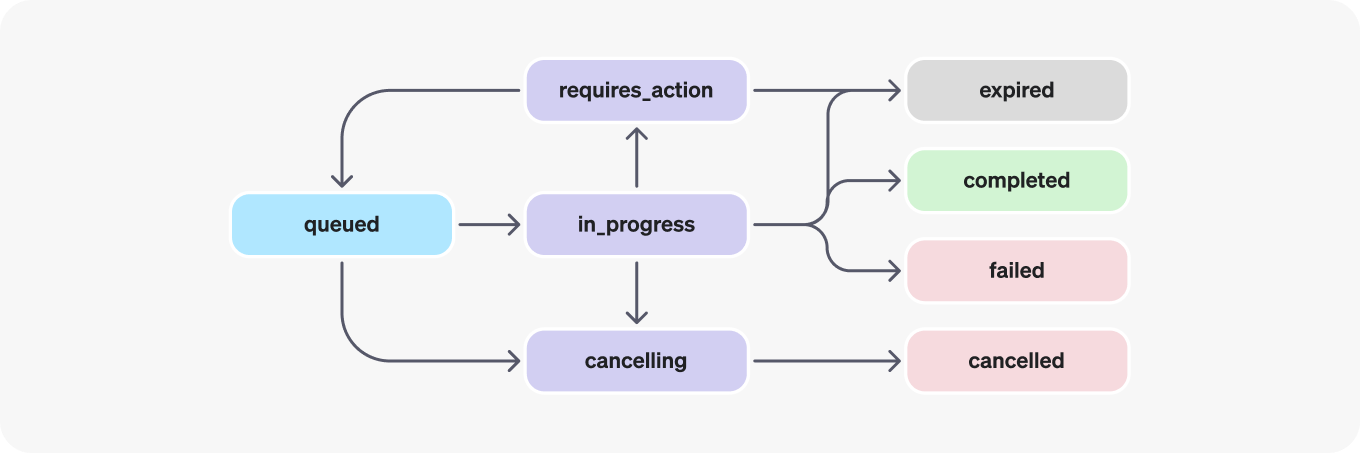

Run objects can have multiple statuses.

| STATUS | DEFINITION |

|---|---|

| queued | When Runs are first created or when you complete the required_action, they are moved to a queued status. They should almost immediately move to in_progress. |

| in_progress | While in_progress, the Assistant uses the model and tools to perform steps. You can view progress being made by the Run by examining the Run Steps. |

| completed | The Run successfully completed! You can now view all Messages the Assistant added to the Thread, and all the steps the Run took. You can also continue the conversation by adding more user Messages to the Thread and creating another Run. |

| requires_action | When using the Function calling tool, the Run will move to a requires_action state once the model determines the names and arguments of the functions to be called. You must then run those functions and submit the outputs before the run proceeds. If the outputs are not provided before the expires_at timestamp passes (roughly 10 minutes past creation), the run will move to an expired status. |

| expired | This happens when the function calling outputs were not submitted before expires_at and the run expires. Additionally, if the runs take too long to execute and go beyond the time stated in expires_at, OpenAI systems will expire the run. |

| cancelling | You can attempt to cancel an in_progress run using the Cancel Run endpoint. Once the attempt to cancel succeeds, status of the Run moves to cancelled. Cancellation is attempted but not guaranteed. |

| cancelled | Run was successfully cancelled. |

| failed | You can view the reason for the failure by looking at the last_error object in the Run. The timestamp for the failure will be recorded under failed_at. |

When a Run is in_progress and not in a terminal state, the Thread is locked. This means that:

- New Messages cannot be added to the Thread.

- New Runs cannot be created on the Thread.
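The statuses in the table fall into two groups, which is often all application code needs to know. The sketch below uses hypothetical helper names and assumes that every non-terminal status keeps the Thread locked, which is one reading of the rule above.

```python
TERMINAL_STATUSES = frozenset({"completed", "expired", "cancelled", "failed"})
NON_TERMINAL_STATUSES = frozenset({"queued", "in_progress", "requires_action", "cancelling"})


def is_terminal(status: str) -> bool:
    """Return True when a Run has finished and will not change status again."""
    if status not in TERMINAL_STATUSES | NON_TERMINAL_STATUSES:
        raise ValueError(f"Unknown run status: {status!r}")
    return status in TERMINAL_STATUSES


def thread_is_locked(status: str) -> bool:
    """Assumption: any non-terminal Run status keeps the Thread locked."""
    return not is_terminal(status)


for status in ("queued", "in_progress", "completed"):
    print(status, "locked" if thread_is_locked(status) else "unlocked")
```

Checking `thread_is_locked` before adding a Message or starting another Run avoids sending a request that would be rejected.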

# By default, the run goes into the queued state. You can periodically retrieve the Run to check on its status to see if it has moved to completed.

import time

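while True:

    time.sleep(5)

    run = client.beta.threads.runs.retrieve(

        thread_id=thread.id,

        run_id=run.id

    )

    # Still working, or waiting on tool outputs: keep polling.

    if run.status in ["queued", "in_progress", "requires_action", "cancelling"]:

        continue

    # Terminal status: report it.

    if run.status in ["completed", "expired", "failed", "cancelled"]:

        print(f"Run is {run.status}")

    # Stop polling on a terminal status or on any unrecognized status.

    break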

print_object(run)

Run is completed

{

"id": "run_2Esq6yOwnUR5eAZiudJwHAVB",

"assistant_id": "asst_5omknA43lpIUtS5ACC1ODpJI",

"cancelled_at": null,

"completed_at": 1714312456,

"created_at": 1714312446,

"expires_at": null,

"failed_at": null,

"incomplete_details": null,

"instructions": "Please address the user as Jane Doe. The user has a premium account.",

"last_error": null,

"max_completion_tokens": null,

"max_prompt_tokens": null,

"metadata": {},

"model": "gpt-4-turbo",

"object": "thread.run",

"required_action": null,

"response_format": "auto",

"started_at": 1714312446,

"status": "completed",

"thread_id": "thread_NwIIUxyNx3h6m3JejynlCDtD",

"tool_choice": "auto",

"tools": [

{

"type": "code_interpreter"

}

],

"truncation_strategy": {

"type": "auto",

"last_messages": null

},

"usage": {

"completion_tokens": 155,

"prompt_tokens": 656,

"total_tokens": 811

},

"temperature": 1.0,

"top_p": 1.0

}
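A polling loop like the one in this step can wait indefinitely if a Run never leaves a non-terminal status. The sketch below adds a timeout and returns early on requires_action so the caller can submit tool outputs. `wait_for_run` is a hypothetical helper that takes any zero-argument callable returning the current status, so it can be tried here with fake statuses instead of a live API call.

```python
import time


def wait_for_run(fetch_status, poll_interval=5.0, timeout=600.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_status() until the Run needs attention or the timeout passes.

    Returns the status once it is terminal or "requires_action".
    Raises TimeoutError if neither happens within `timeout` seconds.
    """
    stop_statuses = {"completed", "expired", "failed", "cancelled", "requires_action"}
    deadline = clock() + timeout
    while True:
        status = fetch_status()
        if status in stop_statuses:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"Run still {status!r} after {timeout} seconds")
        sleep(poll_interval)


# Demonstration with fake statuses; no API call is made.
fake_statuses = iter(["queued", "in_progress", "completed"])
final_status = wait_for_run(lambda: next(fake_statuses), poll_interval=0.0)
print(f"Run is {final_status}")
```

Against the real API, the callable could be something like `lambda: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id).status`.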

Step 6: Display the Assistant's Response¶

Once the Run completes, you can list the Messages added to the Thread by the Assistant.

messages = client.beta.threads.messages.list(

    thread_id=thread.id

)

# Print the raw Messages to show their data structure.

print_object(messages.data)

[

{

"id": "msg_2TlOXBihTPslVWb9hHk3Hm9m",

"assistant_id": "asst_5omknA43lpIUtS5ACC1ODpJI",

"attachments": [],

"completed_at": null,

"content": [

{

"text": {

"annotations": [],

"value": "The solution to the equation \\(3x + 11 = 14\\) is \\(x = 1.0\\)."

},

"type": "text"

}

],

"created_at": 1714312455,

"incomplete_at": null,

"incomplete_details": null,

"metadata": {},

"object": "thread.message",

"role": "assistant",

"run_id": "run_2Esq6yOwnUR5eAZiudJwHAVB",

"status": null,

"thread_id": "thread_NwIIUxyNx3h6m3JejynlCDtD"

},

{

"id": "msg_e8wmwcwwsaZW5hZdtooCLMj3",

"assistant_id": "asst_5omknA43lpIUtS5ACC1ODpJI",

"attachments": [],

"completed_at": null,

"content": [

{

"text": {

"annotations": [],

"value": "Sure, Jane Doe! Let's solve the equation \\(3x + 11 = 14\\).\n\nFirst, we'll isolate the \\(x\\) term by subtracting 11 from both sides of the equation:\n\\[3x + 11 - 11 = 14 - 11\\]\n\nThis simplifies to:\n\\[3x = 3\\]\n\nNow, divide both sides by 3 to find \\(x\\):\n\\[x = \\frac{3}{3}\\]\n\nLet's calculate that."

},

"type": "text"

}

],

"created_at": 1714312447,

"incomplete_at": null,

"incomplete_details": null,

"metadata": {},

"object": "thread.message",

"role": "assistant",

"run_id": "run_2Esq6yOwnUR5eAZiudJwHAVB",

"status": null,

"thread_id": "thread_NwIIUxyNx3h6m3JejynlCDtD"

},

{

"id": "msg_J1xnOOQhiTWsgB8dzmA59iOW",

"assistant_id": null,

"attachments": [],

"completed_at": null,

"content": [

{

"text": {

"annotations": [],

"value": "I need to solve the equation `3x + 11 = 14`. Can you help me?"

},

"type": "text"

}

],

"created_at": 1714312439,

"incomplete_at": null,

"incomplete_details": null,

"metadata": {},

"object": "thread.message",

"role": "user",

"run_id": null,

"status": null,

"thread_id": "thread_NwIIUxyNx3h6m3JejynlCDtD"

}

]

# Finally, display the messages to the user.

# messages.data is ordered newest first (the latest message is at index 0), so reverse it to print in chronological order.

for message in reversed(messages.data):

    print(f"_______Role: {message.role}_______\n")

    # message.content is a list of content parts; print each text part.

    for content in message.content:

        if content.type == "text":

            print(f"{content.text.value}\n")

_______Role: user_______

I need to solve the equation `3x + 11 = 14`. Can you help me?

_______Role: assistant_______

Sure, Jane Doe! Let's solve the equation \(3x + 11 = 14\).

First, we'll isolate the \(x\) term by subtracting 11 from both sides of the equation:

\[3x + 11 - 11 = 14 - 11\]

This simplifies to:

\[3x = 3\]

Now, divide both sides by 3 to find \(x\):

\[x = \frac{3}{3}\]

Let's calculate that.

_______Role: assistant_______

The solution to the equation \(3x + 11 = 14\) is \(x = 1.0\).
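Reversing the list relies on the API returning Messages newest first. An alternative that does not depend on the returned order is to sort by the created_at timestamp. The sketch below works on plain dictionaries. `format_transcript` is a hypothetical helper, and the sample texts are shortened stand-ins for the Messages listed earlier; only the timestamps and roles are copied from that listing.

```python
def format_transcript(messages: list) -> list:
    """Return 'role: text' lines in chronological order, based on created_at."""
    lines = []
    for message in sorted(messages, key=lambda m: m["created_at"]):
        texts = [
            part["text"]["value"]
            for part in message["content"]
            if part["type"] == "text"
        ]
        lines.append(f"{message['role']}: {' '.join(texts)}")
    return lines


sample_messages = [  # newest first; texts are shortened stand-ins
    {"role": "assistant", "created_at": 1714312455,
     "content": [{"type": "text", "text": {"value": "x = 1.0"}}]},
    {"role": "assistant", "created_at": 1714312447,
     "content": [{"type": "text", "text": {"value": "Let's calculate that."}}]},
    {"role": "user", "created_at": 1714312439,
     "content": [{"type": "text", "text": {"value": "Can you help me?"}}]},
]
for line in format_transcript(sample_messages):
    print(line)
```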

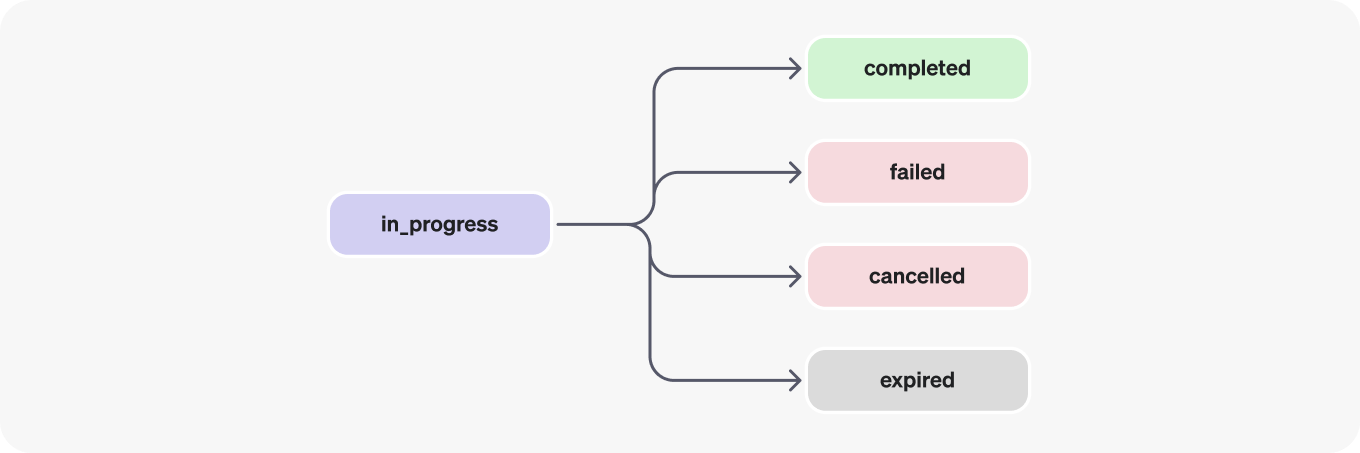

Run Steps¶

Run step statuses have the same meaning as Run statuses.

Most of the interesting detail in the Run Step object lives in the step_details field. There can be two types of step details:

- message_creation: This Run Step is created when the Assistant creates a Message on the Thread.

- tool_calls: This Run Step is created when the Assistant calls a tool. Details around this are covered in the relevant sections of the Tools guide.

run_steps = client.beta.threads.runs.steps.list(

    thread_id=thread.id,

    run_id=run.id

)

for step in run_steps:

    print(f"{step.step_details.type}: {getattr(step.step_details, step.step_details.type)}")

message_creation: MessageCreation(message_id='msg_2TlOXBihTPslVWb9hHk3Hm9m')

tool_calls: [CodeInterpreterToolCall(id='call_e7FhPzw7N6VlGSDXKNebTcAv', code_interpreter=CodeInterpreter(input='# Calculating the value of x\r\nx = 3 / 3\r\nx', outputs=[CodeInterpreterOutputLogs(logs='1.0', type='logs')]), type='code_interpreter')]

message_creation: MessageCreation(message_id='msg_e8wmwcwwsaZW5hZdtooCLMj3')
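The two step types can be handled with a simple branch on the type field. The sketch below uses plain dictionaries that mirror the shape of the steps printed above, with IDs copied from that output. `summarize_step` is a hypothetical helper, and the steps are assumed to be listed newest first, as the output suggests.

```python
def summarize_step(step: dict) -> str:
    """Return a one-line description of a Run Step dict."""
    details = step["step_details"]
    kind = details["type"]
    if kind == "message_creation":
        return f"message_creation -> {details['message_creation']['message_id']}"
    if kind == "tool_calls":
        tool_types = [call["type"] for call in details["tool_calls"]]
        return f"tool_calls -> {', '.join(tool_types)}"
    return f"unrecognized step type: {kind}"


sample_steps = [  # newest first, mirroring the output above
    {"step_details": {"type": "message_creation",
                      "message_creation": {"message_id": "msg_2TlOXBihTPslVWb9hHk3Hm9m"}}},
    {"step_details": {"type": "tool_calls",
                      "tool_calls": [{"id": "call_e7FhPzw7N6VlGSDXKNebTcAv",
                                      "type": "code_interpreter"}]}},
    {"step_details": {"type": "message_creation",
                      "message_creation": {"message_id": "msg_e8wmwcwwsaZW5hZdtooCLMj3"}}},
]
for step in reversed(sample_steps):  # print in the order the steps happened
    print(summarize_step(step))
```

Read in this order, the steps show the Assistant writing an initial Message, calling Code Interpreter, and then writing the final answer.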

Cleanup¶

You can delete your assistant and/or threads.

try:

    # Delete the assistant

    client.beta.assistants.delete(assistant_id=assistant.id)

    # Delete the thread

    client.beta.threads.delete(thread_id=thread.id)

except Exception as e:

    print(e)
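With a single try block, a failure while deleting the Assistant skips the Thread deletion. One way around this, sketched below with a hypothetical `cleanup` helper, is to attempt each deletion independently and collect any errors. The demonstration uses stand-in callables rather than real API calls.

```python
def cleanup(deleters):
    """Run each (label, callable) pair; collect errors instead of stopping early."""
    errors = {}
    for label, delete in deleters:
        try:
            delete()
        except Exception as exc:  # keep going so later resources are still removed
            errors[label] = exc
    return errors


def failing_delete():
    # Stand-in for a deletion that fails, e.g. because the resource is already gone.
    raise RuntimeError("assistant already deleted")


deleted = []
errors = cleanup([
    ("assistant", failing_delete),
    ("thread", lambda: deleted.append("thread")),
])
print(errors, deleted)
```

With the real client, the callables could wrap the delete calls from the cleanup code in this section, for example `lambda: client.beta.threads.delete(thread_id=thread.id)`.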